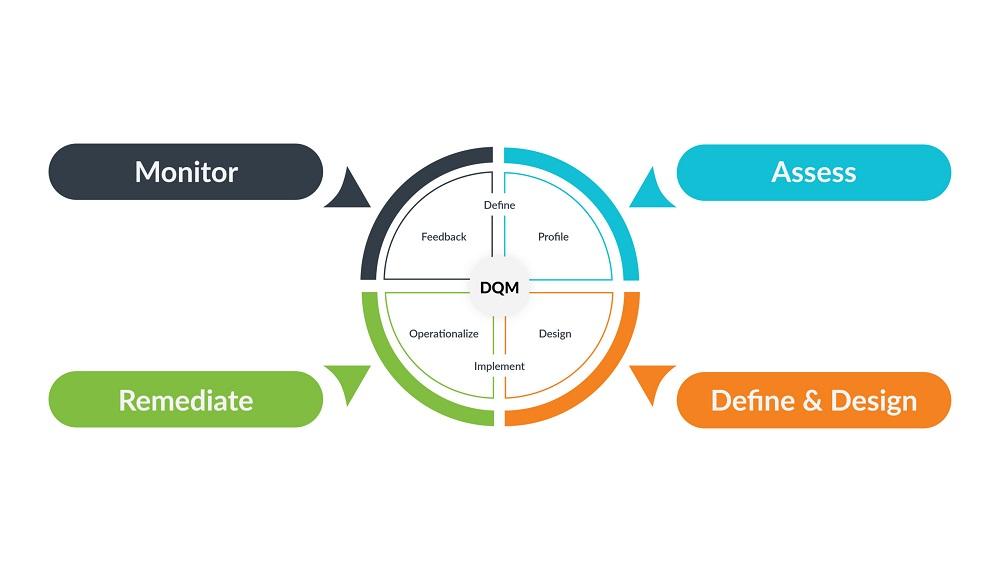

The software that turns an organization's raw, messy data into a high-quality, trusted asset is a sophisticated, multi-faceted system. A modern Data Quality Management (DQM) platform is an integrated suite of tools that manages the end-to-end data quality lifecycle, acting as a digital refinery for an organization's information. Its architecture is typically modular, so different capabilities can be applied at different stages of a data pipeline. The journey almost always begins with the Data Profiling and Discovery module, the initial diagnostic tool. It connects to a wide range of data sources, from relational databases and data warehouses to cloud data lakes and flat files, and performs a statistical analysis of the data's content and structure. It automatically discovers metadata, identifies data types, and calculates metrics such as null counts, frequency distributions of values, and conformity to standard patterns (e.g., email or phone number formats). The result is a detailed "health report" that gives data analysts and stewards a clear, quantitative picture of the quality issues they need to address before any cleansing begins.
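The profiling metrics described above can be illustrated with a minimal Python sketch. It is not taken from any particular DQM product: the function name `profile_column`, the simplified `EMAIL_PATTERN`, and the sample values are all assumptions made for illustration, and a real platform would profile many columns across live data sources.

```python
import re
from collections import Counter

# Hypothetical, deliberately simplified email pattern (illustration only).
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def profile_column(values, pattern=None):
    """Compute basic profiling metrics for one column of raw values."""
    total = len(values)
    # Assumption: None and blank strings both count as nulls.
    non_null = [v for v in values if v is not None and str(v).strip() != ""]
    frequencies = Counter(non_null)
    report = {
        "row_count": total,
        "null_count": total - len(non_null),
        "distinct_count": len(frequencies),
        "top_values": frequencies.most_common(3),
    }
    if pattern is not None:
        # Share of non-null values that conform to the expected pattern.
        conforming = sum(1 for v in non_null if pattern.match(str(v)))
        report["pattern_conformity"] = (
            conforming / len(non_null) if non_null else None
        )
    return report


emails = ["a@example.com", "b@example.com", None, "not-an-email",
          "a@example.com", ""]
report = profile_column(emails, EMAIL_PATTERN)
print(report)
```

For the sample column, the report flags two nulls and shows that one of the four populated values fails the email pattern, which is the kind of quantitative finding a steward would act on.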

Once the quality issues have been identified, the Data Cleansing, Standardization, and Enrichment module comes into play. This is the "heavy-lifting" component of the platform, where the data is actively repaired and improved through a rich, often graphical, interface for building data quality rules and transformations. Cleansing corrects inaccuracies, such as fixing spelling mistakes in city names or replacing invalid characters. Standardization converts data into a consistent, preferred format: for example, parsing a full name field into separate first, middle, and last name fields, or standardizing all address records to a specific postal service format (e.g., converting "St." to "Street" and "Apt" to "Apartment"). Enrichment enhances the data by appending information from external, authoritative sources. For example, a DQM platform could take a customer address, validate it against a postal service database, and enrich the record with a corrected ZIP+4 code or latitude/longitude coordinates. Together, these steps transform raw, inconsistent data into a clean, standardized, and more valuable information asset.
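As a rough illustration of the standardization step, the sketch below expands address abbreviations and splits a full name into parts. The `ABBREVIATIONS` table and both function names are hypothetical; commercial platforms rely on much larger reference data and locale-aware parsers, and enrichment is left out here because it depends on an external postal or geocoding source.

```python
# Hypothetical abbreviation table; real reference data is far larger.
# Caveat: "St." can also mean "Saint", which this naive lookup ignores.
ABBREVIATIONS = {"st": "Street", "apt": "Apartment", "ave": "Avenue"}


def standardize_address(address):
    """Collapse extra whitespace and expand known abbreviations."""
    expanded = []
    for token in address.split():
        # Trailing "." or "," is ignored for the lookup and is dropped
        # when the token is replaced by its expanded form.
        key = token.rstrip(".,").lower()
        expanded.append(ABBREVIATIONS.get(key, token))
    return " ".join(expanded)


def parse_name(full_name):
    """Split a full name into first, middle, and last parts.

    Assumption: the first token is the first name, the last token is the
    last name, and everything in between is treated as the middle name.
    """
    parts = full_name.split()
    if not parts:
        return {"first": "", "middle": "", "last": ""}
    if len(parts) == 1:
        return {"first": parts[0], "middle": "", "last": ""}
    return {
        "first": parts[0],
        "middle": " ".join(parts[1:-1]),
        "last": parts[-1],
    }


clean_address = standardize_address("12  Main St. Apt 4")
name_parts = parse_name("Mary Ann Smith")
print(clean_address)
print(name_parts)
```

Keeping each transformation as a small, independent function mirrors the rule-based approach described above, where individual rules can be reviewed, reordered, and reused across pipelines.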

A third critical architectural pillar is the Data Matching, Linking, and Survivorship module. This is one of the most complex and powerful capabilities of a DQM platform, designed to solve the pervasive problem of duplicate records. This module uses a combination of deterministic rules (e.g., "match if email address and last name are identical") and more advanced probabilistic or "fuzzy" matching algorithms to identify records across different systems that likely refer to the same real-world entity, even if the data is not an exact match (e.g., "Jon Smith" vs. "Jonathan Smyth"). Once duplicate records are identified, the platform links them together into a cluster. The survivorship process then applies a set of configurable rules to intelligently merge the best attributes from all the duplicate records into a single, consolidated "golden record." For example, it might take the most recent phone number, the most complete address, and the most formally written name to create the best possible version of that customer's record. This ability to de-duplicate and create a single source of truth is essential for achieving a 360-degree view of customers, products, or suppliers.
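The matching and survivorship flow can be sketched in a few lines of Python. This is an illustrative simplification, not how any specific product works: fuzzy matching is approximated with the standard library's `difflib.SequenceMatcher`, the 0.6 threshold is arbitrary, clustering is a greedy pass without blocking, and "most formally written name" is crudely approximated as the longest name. All record fields and function names are assumptions.

```python
from difflib import SequenceMatcher


def last_name(record):
    # Assumption: every record has a non-empty name.
    return record["name"].split()[-1].lower()


def is_match(a, b, threshold=0.6):
    """Deterministic rule first, then a fuzzy name comparison."""
    if a["email"].lower() == b["email"].lower() and last_name(a) == last_name(b):
        return True
    score = SequenceMatcher(None, a["name"].lower(), b["name"].lower()).ratio()
    return score >= threshold


def cluster_records(records):
    """Greedy clustering: join the first cluster with any matching member."""
    clusters = []
    for record in records:
        for cluster in clusters:
            if any(is_match(record, member) for member in cluster):
                cluster.append(record)
                break
        else:
            clusters.append([record])
    return clusters


def build_golden_record(cluster):
    """Apply simple survivorship rules to one cluster of duplicates."""
    with_phone = [r for r in cluster if r["phone"]]
    # ISO dates compare correctly as strings, so max() finds the newest.
    newest = max(with_phone, key=lambda r: r["updated"]) if with_phone else None
    return {
        "name": max((r["name"] for r in cluster), key=len),
        "phone": newest["phone"] if newest else "",
        "address": max((r["address"] for r in cluster), key=len),
    }


records = [
    {"name": "Jon Smith", "email": "jsmith@example.com", "phone": "555-0100",
     "address": "12 Main St", "updated": "2022-03-01"},
    {"name": "Jonathan Smyth", "email": "jon.smyth@example.com",
     "phone": "555-0199", "address": "12 Main Street, Apartment 4",
     "updated": "2024-01-15"},
    {"name": "Maria Lopez", "email": "mlopez@example.com", "phone": "",
     "address": "9 Oak Avenue", "updated": "2023-06-30"},
]

clusters = cluster_records(records)
golden = [build_golden_record(c) for c in clusters]
print(golden)
```

In this sample, the two Smith/Smyth records fall into one cluster and the golden record keeps the newest phone number and the fuller address. Production systems typically add blocking keys to avoid comparing every pair of records and weight several attributes rather than the name alone.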

Finally, a comprehensive DQM platform is architected for ongoing governance and collaboration through its Data Monitoring and Stewardship module. Data quality is not a one-time project; it is a continuous process. This module allows data quality rules to be deployed in a monitoring mode, where they continuously assess the quality of data as it flows through the organization's systems. Interactive dashboards and scorecards let business leaders track data quality KPIs over time and measure the business impact of their DQM initiatives. When monitoring detects a data quality issue that cannot be resolved automatically, the stewardship functionality takes over. The platform provides a web-based workflow application in which these data exceptions are automatically routed to the designated "data steward," a business user who is responsible for that particular data domain. The steward can then review the issue, make a correction, and approve the change, with every action logged in a complete audit trail. This collaborative workflow embeds data quality responsibilities directly into the business, creating a culture of data ownership and continuous improvement across the enterprise.
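The monitoring and stewardship loop described above might look like the following sketch. Everything here is hypothetical: the `Rule` class, the `STEWARDS` routing table, and the in-memory `audit_log` stand in for what a real platform would implement with scheduled jobs, a workflow application, and persistent audit storage.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable

# Hypothetical mapping from data domain to the responsible steward.
STEWARDS = {"customer": "alice"}


@dataclass
class Rule:
    name: str
    domain: str                      # used to route exceptions
    check: Callable[[dict], bool]    # returns True when a record passes


def monitor(records, rules):
    """Score each rule (pass rate) and collect routed exceptions."""
    scorecard, exceptions = {}, []
    for rule in rules:
        failures = [r for r in records if not rule.check(r)]
        passed = len(records) - len(failures)
        scorecard[rule.name] = passed / len(records) if records else None
        for r in failures:
            exceptions.append({
                "rule": rule.name,
                "record_id": r["id"],
                "steward": STEWARDS.get(rule.domain, "unassigned"),
            })
    return scorecard, exceptions


audit_log = []


def resolve(exception, records, field_name, new_value):
    """Apply a steward's correction and record it in the audit trail."""
    record = next(r for r in records if r["id"] == exception["record_id"])
    audit_log.append({
        "record_id": record["id"],
        "field": field_name,
        "old": record.get(field_name),
        "new": new_value,
        "steward": exception["steward"],
        "at": datetime.now(timezone.utc).isoformat(),
    })
    record[field_name] = new_value


customers = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": ""},
    {"id": 3, "email": "c@example.com"},
    {"id": 4, "email": "d@example.com"},
]
rules = [Rule("email_present", "customer", lambda r: bool(r.get("email")))]

scorecard, exceptions = monitor(customers, rules)
resolve(exceptions[0], customers, "email", "b@example.com")
rescored, remaining = monitor(customers, rules)
print(scorecard, rescored, len(audit_log))
```

Re-running `monitor` after the correction shows the KPI recovering, which is the feedback loop a scorecard is meant to make visible over time.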
